Monitoring and control

The safe and efficient operation of today's industrial facilities and infrastructure increasingly requires continuous monitoring and control of ongoing processes and situations. With the rapid growth in the volume of recorded data—most of which must be processed in real time—AI technologies are becoming not just relevant, but practically indispensable in this field.

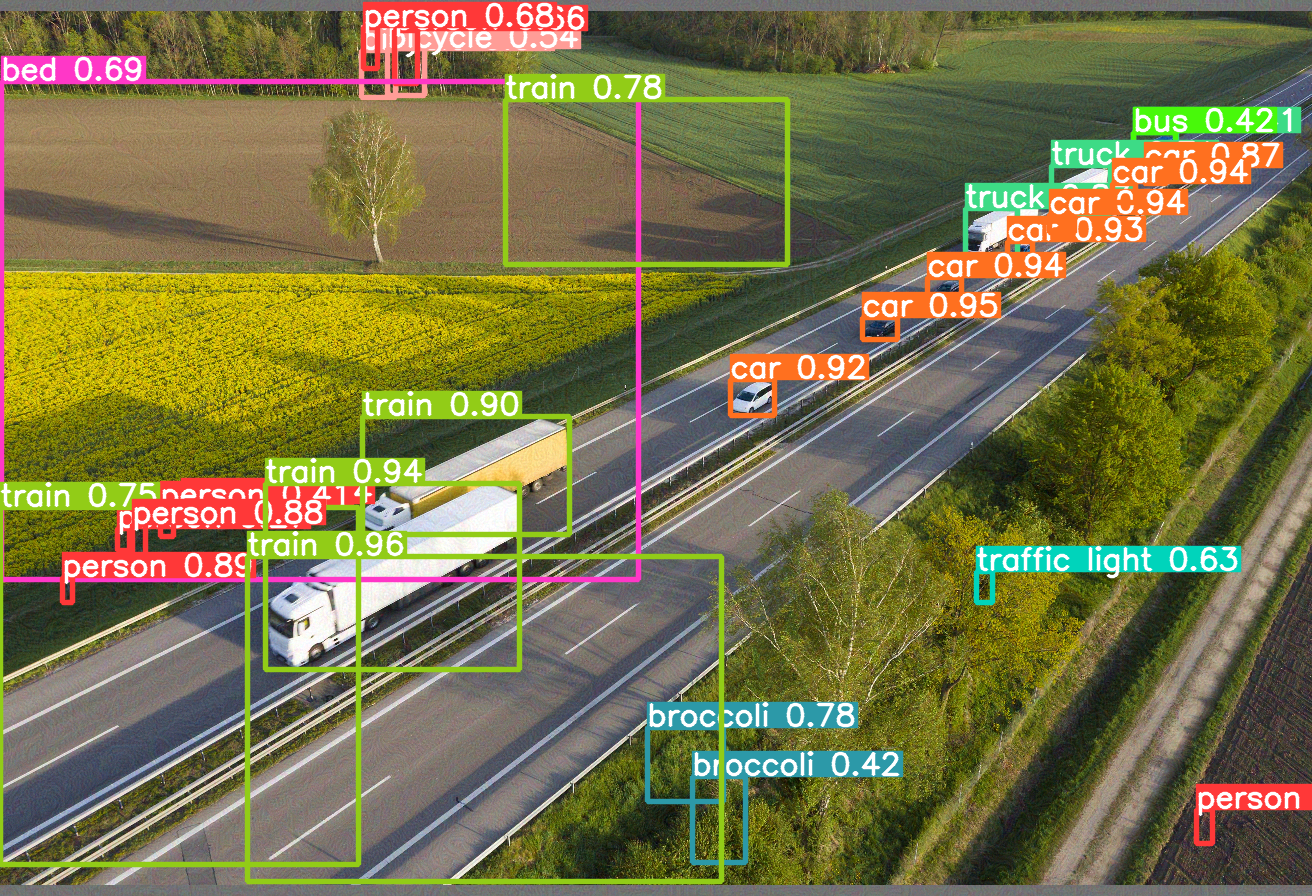

AI can serve both as an assistant to a monitoring and control system operator and as a decision-making system itself, provided this does not pose risks to humans or the environment. Typical monitoring and control tasks handled by AI include object, person, and situation recognition from surveillance camera data (video analytics), monitoring of production processes in enterprises using sensor telemetry, and non-destructive testing of equipment.

Example of a video analytics system monitoring road infrastructure: original and processed images

Standardization

The standardization of AI for monitoring and control tasks is currently in an active phase of development. In Russia, notable examples include a series of GOST standards covering intelligent transport infrastructure management [1, 2, 3], situational video analytics [4], human behavior monitoring and intent prediction [5, 6], and recognition and classification of road transport objects [7, 8]. A regulatory framework for the security of AI monitoring and control systems is likely to take shape over the coming years, both in Russia and abroad.

AI threats in monitoring and control

The first stage of the AI system lifecycle involves preparing data for AI model training. The data must meet standard requirements, including accuracy, completeness, security, and unbiasedness [9-11]. Any deviation from these requirements can result in AI models with low accuracy, poor reliability, and weak generalization properties [10].
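As an illustration of how the completeness and bias requirements might be checked before training, the following minimal Python sketch audits a labelled dataset for missing fields, duplicate records, and class imbalance. The record layout (`image_id`, `label`), the function name `audit_dataset`, and the `max_imbalance` threshold are assumptions made for this example; they are not taken from the cited standards.

```python
from collections import Counter


def audit_dataset(records, required_fields=("image_id", "label"), max_imbalance=10.0):
    """Return a list of human-readable findings for a labelled dataset.

    Field names and the imbalance threshold are illustrative assumptions.
    """
    findings = []

    # Completeness: every record needs a non-empty value for each required field.
    incomplete = [
        i for i, r in enumerate(records)
        if any(r.get(f) in (None, "") for f in required_fields)
    ]
    if incomplete:
        findings.append(f"{len(incomplete)} record(s) with missing fields: {incomplete}")

    # Duplicates: a repeated image_id may leak between training and test splits.
    id_counts = Counter(r.get("image_id") for r in records if r.get("image_id"))
    duplicates = sorted(k for k, n in id_counts.items() if n > 1)
    if duplicates:
        findings.append(f"duplicate image_id values: {duplicates}")

    # Balance: a crude proxy for bias, comparing the largest and smallest classes.
    label_counts = Counter(r["label"] for r in records if r.get("label"))
    if label_counts:
        ratio = max(label_counts.values()) / min(label_counts.values())
        if ratio > max_imbalance:
            findings.append(f"class imbalance ratio {ratio:.1f} exceeds {max_imbalance}")

    return findings


records = [
    {"image_id": "a1", "label": "car"},
    {"image_id": "a2", "label": "car"},
    {"image_id": "a2", "label": "car"},         # duplicate id
    {"image_id": "a3", "label": ""},            # missing label
    {"image_id": "a4", "label": "pedestrian"},
]
for finding in audit_dataset(records, max_imbalance=2.0):
    print(finding)
```

A check like this covers only the mechanical part of data quality. Accuracy of the labels themselves and the security of the data pipeline need separate review.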

For AI systems that monitor people, requirements for confidentiality, ethics, and personal data protection are critically important and mandatory. The European Union holds the strictest position on this issue. Under the EU Artificial Intelligence Act (EU AI Act) [12], several AI applications related directly or indirectly to human monitoring are classified as unacceptable risks to citizens' rights and freedoms and are therefore banned in the EU [13]. Prohibited AI practices include:

- creating or expanding facial recognition databases by indiscriminately collecting facial images from surveillance cameras or the internet;

- emotion detection in employment and educational settings;

- real-time remote biometric identification in public places (except in legally defined cases).

The next stage in the AI system lifecycle is model creation and training, which should ideally take place in secure software environments using trusted software. Open-source YOLO (You Only Look Once) models, which are deep neural networks for object detection and recognition, are widely used in video analytics [14, 15]. It is essential to understand that "neural networks downloaded from the internet" may contain personal data theft modules, triggers that induce undeclared behavior in the model, or other malicious functionality, and that additional training or modification of the model does not necessarily remove them. Only a thorough analysis of the topology and internal logic of such models can establish whether they are safe to use.
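One basic precaution when working with downloaded weights is to confirm that the file matches a checksum pinned from a trusted source. The sketch below shows this with Python's standard library. The function names `sha256_of_file` and `verify_weights` and the file name `model.bin` are hypothetical. An integrity check of this kind only detects tampering after the reference hash was recorded; it does not replace the analysis of the model's topology and internal logic described above.

```python
import hashlib
import hmac
import tempfile
from pathlib import Path


def sha256_of_file(path, chunk_size=1 << 20):
    """Compute the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_weights(path, expected_sha256):
    """Return True only if the file's digest equals the pinned reference digest."""
    actual = sha256_of_file(path)
    return hmac.compare_digest(actual, expected_sha256.lower())


# Demonstration with a stand-in file; real weights would come from the model vendor.
with tempfile.TemporaryDirectory() as tmp:
    weights = Path(tmp) / "model.bin"
    weights.write_bytes(b"example weights")
    pinned = hashlib.sha256(b"example weights").hexdigest()

    print(verify_weights(weights, pinned))   # True: file matches the pinned digest

    weights.write_bytes(b"example weights, tampered")
    print(verify_weights(weights, pinned))   # False: contents changed
```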

During the training stage, AI models must be protected against attacks on input data [16], including so-called adversarial attacks, which are especially relevant for video analytics tasks. Most adversarial attacks perturb input images so as to alter the model's output while keeping the modified images visually indistinguishable from the originals. The sheer number of successful attacks on input data, their diversity, and the ability to target specific types of neural networks pose a major challenge for AI security methods, primarily adversarial training techniques [17, 18].

Example of an adversarial attack on a video analytics system
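To make the idea of a small, targeted perturbation concrete, the sketch below applies the fast gradient sign method (FGSM), one well-known adversarial attack, to a toy logistic-regression classifier. This is an illustrative stand-in, not a YOLO model and not the attack shown in the figure. The weights, the four-value "image", and the step size `epsilon` are made up for the example.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(weights, bias, x):
    """Probability of class 1 under a logistic-regression model."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def sign(v):
    return (v > 0) - (v < 0)


def fgsm_perturb(weights, bias, x, label, epsilon):
    """FGSM step: x_adv = clip(x + epsilon * sign(dLoss/dx), 0, 1).

    For cross-entropy loss on a logistic model, dLoss/dx = (p - label) * w.
    """
    p = predict_proba(weights, bias, x)
    grad = [(p - label) * w for w in weights]
    # Move each input value by at most epsilon and keep it in the valid pixel range.
    return [min(1.0, max(0.0, xi + epsilon * sign(g))) for xi, g in zip(x, grad)]


# Toy model and input (assumed values, chosen so the effect is easy to see).
weights = [2.0, -3.0, 1.0, 0.5]
bias = 0.0
x = [0.3, 0.1, 0.2, 0.2]   # normalized "pixel" values in [0, 1]
label = 1                  # true class of x

x_adv = fgsm_perturb(weights, bias, x, label, epsilon=0.1)

print(int(predict_proba(weights, bias, x) >= 0.5))       # 1: original is classified correctly
print(int(predict_proba(weights, bias, x_adv) >= 0.5))   # 0: perturbed input is misclassified
```

No input value changes by more than 0.1, yet the predicted class flips. Adversarial training counters this by generating such perturbed samples during training and including them, with their correct labels, in the training set.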

Once the model is created, it must undergo rigorous testing following a predefined methodology [10]. For models whose vulnerabilities pose the greatest threats, external testing by AI security experts may also be required.

The key to ensuring the security of your AI solution is understanding its specific vulnerabilities and strictly following all AI security recommendations and methodologies.

References

- 1. GOST R 70985-2023 “Artificial intelligence systems in road transport. Intelligent transport infrastructure management systems. Requirements for testing number plates recognition algorithms”.

- 2. GOST R 70984-2023 “Artificial intelligence systems in road transport. Intelligent transport infrastructure management systems. Requirements for testing road condition prediction algorithms”.

- 3. GOST R 70983-2023 “Artificial intelligence systems in road transport. Intelligent transport infrastructure management systems. Requirements for testing traffic forecasting algorithms”.

- 4. GOST R 59385-2021 “Information technology. Artificial intelligence. Situational video analytics. Terms and definitions”.

- 5. GOST R 58776-2019 “Means of monitoring behavior and predicting people’s intentions. Terms and definitions”.

- 6. GOST R 59391-2021 “Means of monitoring behavior and predicting people’s intentions. Artificial intelligent systems for motor vehicles. Classification, purpose, composition and characteristics of photo and video recorders”.

- 7. GOST R 70321.6-2022 “Artificial intelligence technologies for processing of Earth remote sensing data. Artificial intelligence algorithms for recognition of objects of road networks on satellite images obtained from optical-electronic observation satellites. Typical testing procedure”.

- 8. GOST R 70321.7-2022 “Artificial intelligence technologies for processing of Earth remote sensing data. Artificial intelligence algorithms for classification types of objects of road networks on satellite images obtained from optical-electronic observation satellites. Typical testing procedure”.

- 9. GOST R 70889-2023 “Information technology. Artificial intelligence. Data life cycle framework”.

- 10. GOST R 59898-2021 “Quality assurance of artificial intelligence systems. General”.

- 11. ISO/IEC TR 24027:2021 “Information technology — Artificial intelligence (AI) — Bias in AI systems and AI aided decision making”.

- 12. https://artificialintelligenceact.eu/

- 13. https://artificialintelligenceact.eu/article/5/

- 14. Redmon J. et al. You only look once: Unified, real-time object detection // Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. – 2016. – pp. 779–788.

- 15. G. Jocher, A. Chaurasia, and J. Qiu, “YOLO by Ultralytics.” https://github.com/ultralytics/ultralytics, 2023.

- 16. PNST 836-2023 (ISO/IEC TR 5469) “Artificial Intelligence. Functional Safety and AI Systems”.

- 17. I. Reyes-Amezcua, G. Ochoa-Ruiz, and A. Mendez-Vazquez, “Enhancing image classification robustness through adversarial sampling with delta data augmentation (DDA),” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2024, pp. 274–283.

- 18. Zhao M. et al. Adversarial Training: A Survey //arXiv preprint arXiv:2410.15042. – 2024.