Manufacturing

The integration of AI technologies into manufacturing is another step toward increased efficiency, cost reduction, and a sustainable future. AI is rapidly transforming existing manufacturing processes, making them safer, more cost-effective, faster, more accurate, and more flexible.

Here are just a few key applications of AI in manufacturing:

- Automation of routine tasks and processes in hazardous environments

- Data analytics for predictive equipment maintenance and failure forecasting

- Logistics optimization, demand forecasting, and supply chain management

- Detection of defects during production

- Modeling and digital twins

- Optimization of resource consumption (electricity, water, etc.)

Thus, AI-driven intelligent automation enables enterprises to minimize downtime, optimize resource usage, and improve quality control.

However, alongside its evident advantages, the implementation of AI in manufacturing also introduces additional risks, requiring special attention to the cybersecurity and reliability of AI systems [1, 2].

AI threats in manufacturing

- Traditional cyberattacks aimed at hacking AI systems to sabotage production or steal data.

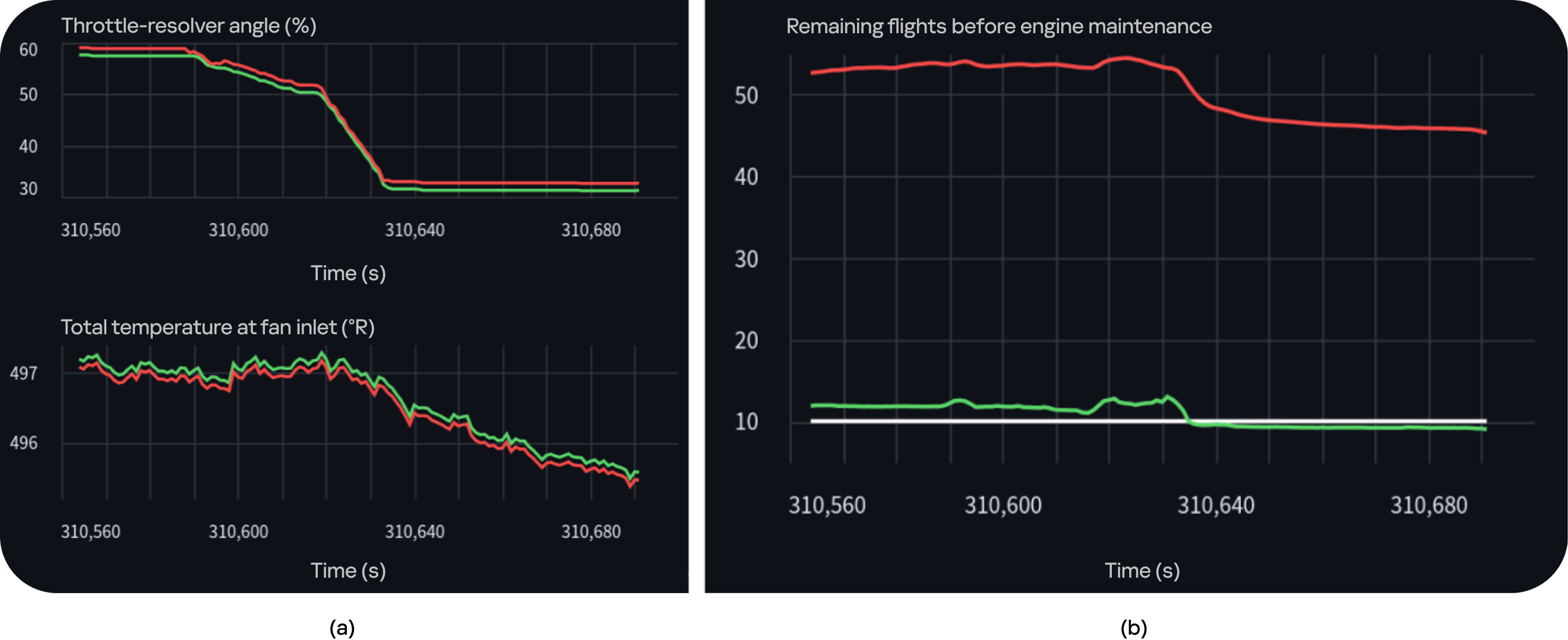

- Vulnerabilities in AI models and algorithms. For example, malicious manipulation of input data (adversarial attacks [4, 5, 6]) may cause a model for predicting equipment degradation to generate incorrect forecasts (Fig. 1), leading to excessive maintenance costs or accidents.

- Bias in training data, which can arise either intentionally through attacks on the AI system or unintentionally due to developers' insufficient understanding of potential sources of data bias. A dedicated standard [3] outlines methods for mitigating bias in AI systems.

- Data drift, i.e., gradual changes in the statistical properties of input data, or the presence of "out-of-distribution" examples, i.e., data significantly different from the model's training distribution. Both can degrade model accuracy without any visible failure.

- Threats to the physical safety of personnel and equipment. For example, a robotic arm may fail to recognize a person in the work zone and cause injury, or it may damage equipment during maintenance. This category also includes conflicts between human and AI decisions: for instance, an AI system might prevent an emergency shutdown in the name of "optimization."

Fig. 1. Example of an adversarial attack on a turbofan aircraft engine degradation prediction system (NASA C-MAPSS-2 Turbofan Engine Degradation Simulation Data Set-2, https://paperswithcode.com/dataset/nasa-c-mapss-2): a) clean (green line) vs. attacked (red line) input data, shown for two indicators: throttle-resolver angle and total temperature at fan inlet; b) prediction of remaining flights before engine maintenance using clean (green line) vs. attacked (red line) data, with the actual value shown as a white line.
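The kind of input manipulation shown in Fig. 1 can be illustrated with a minimal sketch of the fast gradient sign method described in [5], applied here to a toy linear model. Everything in the block is an assumption made for illustration: the weights, the bias, the sensor readings, and the helper names `predict` and `fgsm_perturb` are hypothetical and are not the model or data behind Fig. 1.

```python
# Toy illustration only: a hypothetical linear "remaining useful life" (RUL) model.
# All weights and sensor values are invented for this example.

def sign(v: float) -> int:
    """Return -1, 0, or 1 depending on the sign of v."""
    return (v > 0) - (v < 0)


def predict(weights, bias, x):
    """Linear prediction: dot(weights, x) + bias."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias


def fgsm_perturb(weights, x, epsilon):
    """Shift every input by epsilon in the direction that raises the prediction.

    For a linear model the gradient of the output with respect to the input
    is the weight vector, so the sign of each weight gives the direction.
    """
    return [xi + epsilon * sign(w) for w, xi in zip(weights, x)]


weights = [-40.0, 25.0, -10.0]  # hypothetical weights for normalized sensor readings
bias = 120.0                    # hypothetical bias, in remaining flights
clean = [0.60, 0.30, 0.50]      # hypothetical clean sensor readings

attacked = fgsm_perturb(weights, clean, epsilon=0.05)
clean_rul = predict(weights, bias, clean)
attacked_rul = predict(weights, bias, attacked)

print(f"clean prediction:    {clean_rul:.2f}")
print(f"attacked prediction: {attacked_rul:.2f}")
```

Each reading moves by at most `epsilon`, yet the shifts add up across inputs, so the predicted remaining life increases by `epsilon` times the sum of the absolute weights. This is why small, hard-to-notice changes in sensor data can translate into a materially different maintenance forecast.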

Another risk specific to industrial AI is the potential long-term consequences of AI errors. If an AI system introduces slight deviations while optimizing an industrial process (for example, in the composition of an alloy), the consequences may only become apparent months or even years later.

Given these risks, ensuring AI safety in manufacturing goes beyond traditional IT system protection. It requires a comprehensive approach that combines standard cybersecurity measures with measures specific to AI threats. It is essential to control the security of AI data supply chains [7], ensure the resilience of algorithms to malicious interference, and continuously monitor model performance for anomalies. Only such a multi-layered approach can ensure that AI remains both a powerful and a safe tool for automating manufacturing processes.
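As one possible building block for such monitoring, the sketch below compares live values of a single input feature against the training data using the Population Stability Index (PSI). PSI is chosen here only as a common drift statistic; it is not prescribed by the standards cited in this article. The function name `psi`, the synthetic data, and the 0.25 alert threshold (a widely quoted rule of thumb) are assumptions for illustration.

```python
import math
import random


def psi(reference, current, bins=10):
    """Population Stability Index between two samples of one feature."""
    ref_sorted = sorted(reference)
    # Bin edges at reference quantiles, so each bin holds roughly equal reference mass.
    edges = [ref_sorted[int(len(ref_sorted) * i / bins)] for i in range(1, bins)]

    def proportions(sample):
        counts = [0] * bins
        for v in sample:
            idx = sum(v > e for e in edges)  # index of the bin that v falls into
            counts[idx] += 1
        # A small floor avoids log(0) when a bin is empty.
        return [max(c / len(sample), 1e-6) for c in counts]

    p_ref = proportions(reference)
    p_cur = proportions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(p_ref, p_cur))


# Synthetic data standing in for one normalized sensor channel (assumption).
rng = random.Random(0)
training = [rng.gauss(0.0, 1.0) for _ in range(5000)]
same_regime = [rng.gauss(0.0, 1.0) for _ in range(5000)]
drifted = [rng.gauss(0.8, 1.0) for _ in range(5000)]  # mean has shifted

psi_stable = psi(training, same_regime)
psi_drifted = psi(training, drifted)

ALERT_THRESHOLD = 0.25  # rule-of-thumb value, to be tuned per process
for name, value in [("same regime", psi_stable), ("drifted", psi_drifted)]:
    status = "ALERT" if value > ALERT_THRESHOLD else "ok"
    print(f"{name}: PSI={value:.3f} [{status}]")
```

In practice such a check would run per feature on a sliding window of recent data, and an alert would trigger a review of the model rather than an automatic action.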

References

- 1. PNST 776-2022 (ISO/IEC FDIS 23894). "Information technology. Artificial intelligence. Guidance on risk management".

- 2. PNST 836-2023 (ISO/IEC TR 5469). "Artificial intelligence. Functional safety and AI systems".

- 3. PNST 839-2023 (ISO/IEC TR 24027:2021). "Information technology. Artificial intelligence (AI). Bias in AI systems and AI aided decision making".

- 4. Szegedy C. et al. Intriguing properties of neural networks // arXiv preprint arXiv:1312.6199. – 2013.

- 5. Goodfellow I. J., Shlens J., Szegedy C. Explaining and harnessing adversarial examples // arXiv preprint arXiv:1412.6572. – 2014.

- 6. Madry A. et al. Towards deep learning models resistant to adversarial attacks // arXiv preprint arXiv:1706.06083. – 2017.

- 7. GOST R 70889-2023 (ISO/IEC 8183:2023). "Information technology. Artificial intelligence. Data life cycle framework".